Will robots soon write our students’ essays? Since OpenAI unveiled ChatGPT in November 2022, this question has become more pressing than ever. ChatGPT not only became the fastest-adopted ed-tech tool ever, but by March 2023, Microsoft had begun integrating the technology behind it into its Bing search engine and widely used software such as Word, Excel, and PowerPoint (Dotan, 2023). Amid this meteoric rise, the international TEFL community finds itself at a crossroads, grappling with the possibility that AI may write the reports and essays its members assign.

As AI-powered tools rapidly become ubiquitous among students, educators have begun to discuss the initial implications, limitations, threats, and opportunities of AI for educational practice in general (e.g., Farrokhnia et al., 2023; Van Gompel, 2023) and for language teaching and learning in particular (e.g., Hockly, 2023; Kohnke et al., 2023; Raine, 2023). Van Gompel (2023) suggests that educators respond proactively by putting guardrails in place. She offers several specific tactics, including (a) developing academic integrity policies that clearly articulate acceptable uses of AI and best practices for AI in classrooms, (b) designing writing assignments and associated scoring tools that are resistant to student misuse of AI, and (c) structuring the writing process in ways that make such misuse much less likely. A recent survey of 100 U.S. universities found that a slim majority (51%) allow individual instructors to decide on AI policies (Caulfield, 2023b).

Meanwhile, efforts to develop tools that detect AI-generated content have shown major limitations: no AI detector comes close to 100% accuracy, such tools can produce false positives, and text generated by OpenAI’s latest model (GPT-4) is even harder to detect (Caulfield, 2023a). These limitations are particularly problematic for teachers of L2 learners because, as Liang et al. (2023) demonstrate, current detectors are biased against non-native writers: they are more likely to flag text written by non-native speakers as AI-generated, even when it is not. Liang et al. attribute this bias to the narrower range of vocabulary and syntax typical of non-native writing, which detectors tend to misread as a sign of machine generation. Taken together, these developments highlight the increasing use of AI in education and suggest a crucial role for educators in guiding its ethical use.

Benefits of AI in Education

The pedagogical benefits of AI tools like ChatGPT include providing linguistic input and interaction, offering personalized feedback and practice, and creating texts in various genres and at various levels of complexity (Kohnke et al., 2023). Recent research suggests that GPT-based automated essay scoring is both reliable and consistent and can provide detailed feedback that may enhance the student learning experience (Mizumoto & Eguchi, 2023). In a recent TLT Wired column, Raine (2023) suggested productive uses of ChatGPT, such as generating easy reading passages with accompanying activities. He also pointed out risks and limitations, including AI-assisted plagiarism and occasional inaccuracies in AI-generated content. This article builds on these discussions, focusing on the ethical and effective integration of AI tools in academic writing classes. We argue for a proactive approach by educators, which involves several key strategies, including the following:

Curriculum integration: Thoughtfully integrate AI tools into the curriculum, ensuring they complement and enhance traditional teaching methods rather than replace them.

Establishing guidelines: Implement clear guidelines and policies that define appropriate use of AI in academic settings, helping to prevent misuse such as plagiarism.

Monitoring and evaluation: Continuously monitor the impact of AI tools on student learning and adjust strategies as needed to ensure they are meeting educational objectives.

By adopting these strategies, educators can guide students to use AI tools responsibly, preparing them for a future where AI is increasingly integrated into various aspects of life. To aid in this process, we propose a set of “golden rules” for students to follow and provide examples of acceptable uses of AI in academic contexts.

Golden Rules for Responsible, Effective AI Use for Academic Purposes

To ensure responsible AI use in academia, we propose the following golden rules. Although they can be adapted to specific needs, they serve as a general guide to ethical and effective use across various contexts:

Rule 1: Always provide AI with clear and specific prompts. If the output is not as expected, refine your prompt for better results.

Rule 2: Treat AI-generated content as a draft requiring your critical review and personal input. Remember to modify the content to prevent plagiarism and to correct any grammatical errors. Relying solely on AI to complete your assignments not only risks academic dishonesty but also undermines the development of your own writing skills.

Rule 3: If you have used AI assistance, acknowledge its role and the prompts employed. Always follow your school’s academic honesty policies.

Rule 4: Always verify the accuracy of AI-generated information. Assume potential inaccuracies and cross-check with reliable sources. Avoid citing AI as a factual source, and remember that you are responsible for any errors.

Rule 5: Fully understand the AI-generated content before integrating it into your work. Think about its relevance and appropriateness for your assignment.

Rule 6: Use AI with academic integrity. If you are unsure what is allowed in your class, ask your instructor.

Rule 7: When interacting with AI tools, always avoid sharing personal or sensitive information. This includes not only personal data, such as your name, address, and identification numbers, but also sensitive intellectual property or confidential information related to research and academic work. Remember that AI systems, including ChatGPT, are not designed to handle confidential information and may run on servers where your data could be stored or accessed.

Facilitating the Writing and Speaking Process With Generative AI

Having set forth golden rules for AI use, it is crucial to demonstrate practical, acceptable applications of such tools. Here, we use ChatGPT’s GPT-3.5 model to show how it can enhance university-level academic writing and speaking. While GPT-4 is now available to paid ChatGPT Plus users, we have chosen to focus on the freely available version, as this is probably the software most students will use. It is also worth noting that GPT-4 is available free of charge in the Microsoft Edge web browser. These examples can be adapted to various contexts.

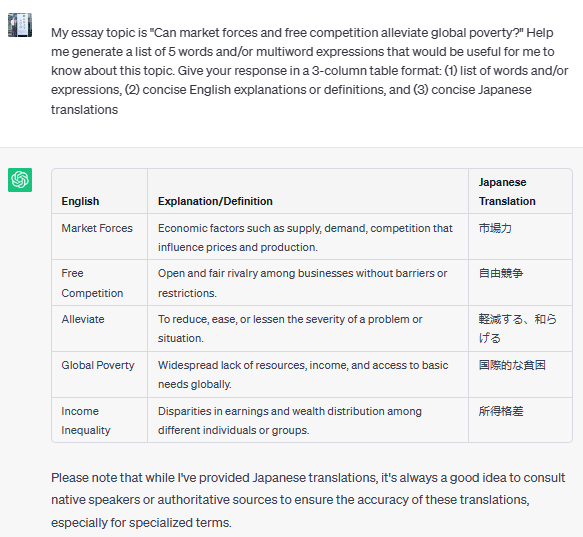

Appendix 1 shows how ChatGPT can be prompted to generate a bilingual list of vocabulary and key expressions on a given topic. Although Japanese translations were requested in this example, the approach can be tailored to any language, offering students an efficient way to build their vocabulary. When students write their initial drafts, they can be encouraged not only to include these keywords but also to search the literature to see how other authors define these terms.

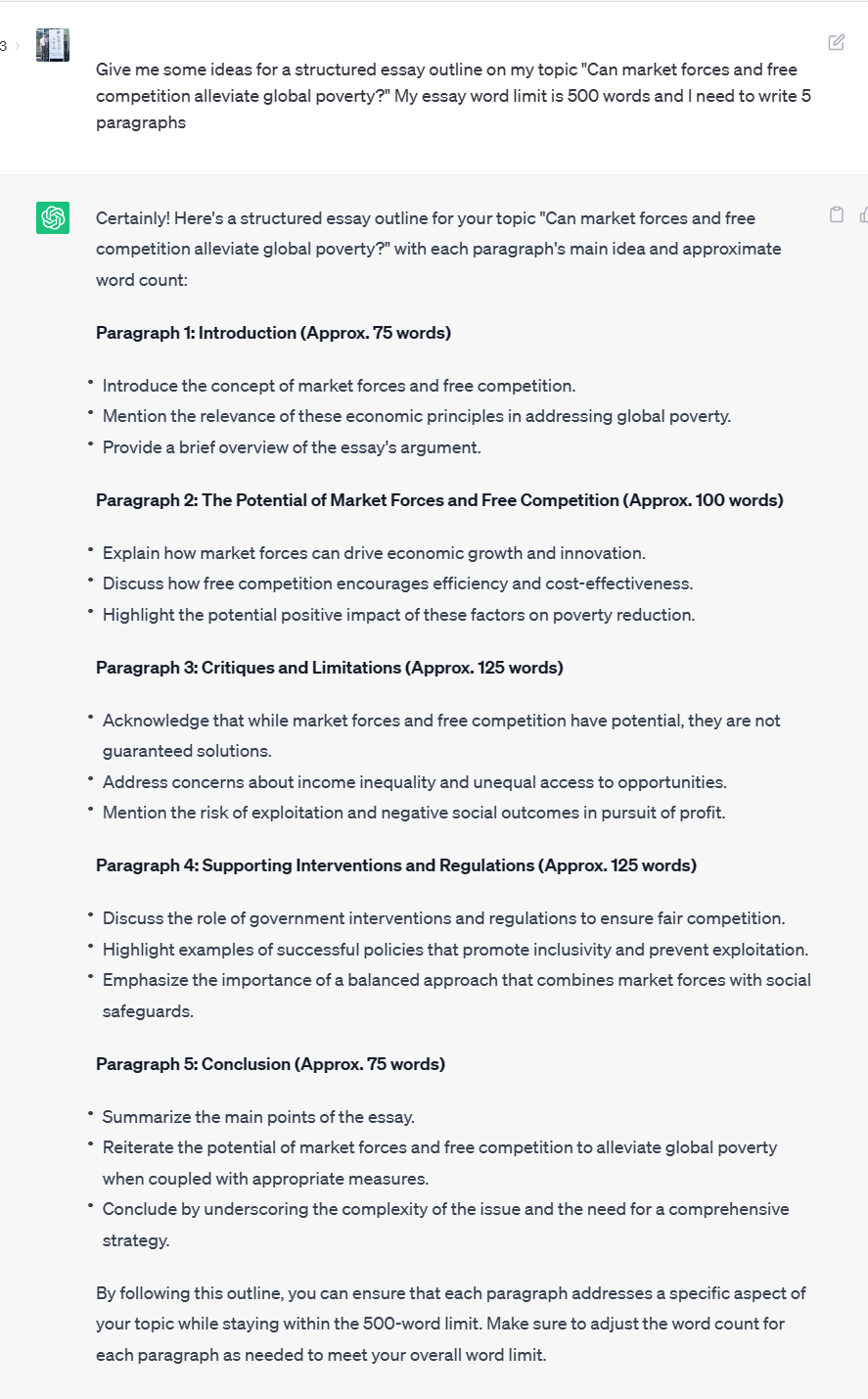

AI can also be prompted to draft a structured essay outline (Appendix 2) and to assist with tasks such as formulating a thesis statement, structuring arguments, and addressing counterarguments. Some of these tasks may be unnecessary for a short 500-word essay like the one in Appendix 2, but for longer essays (over 1,000 words), students could benefit from using AI to generate examples to compare with their own attempts.

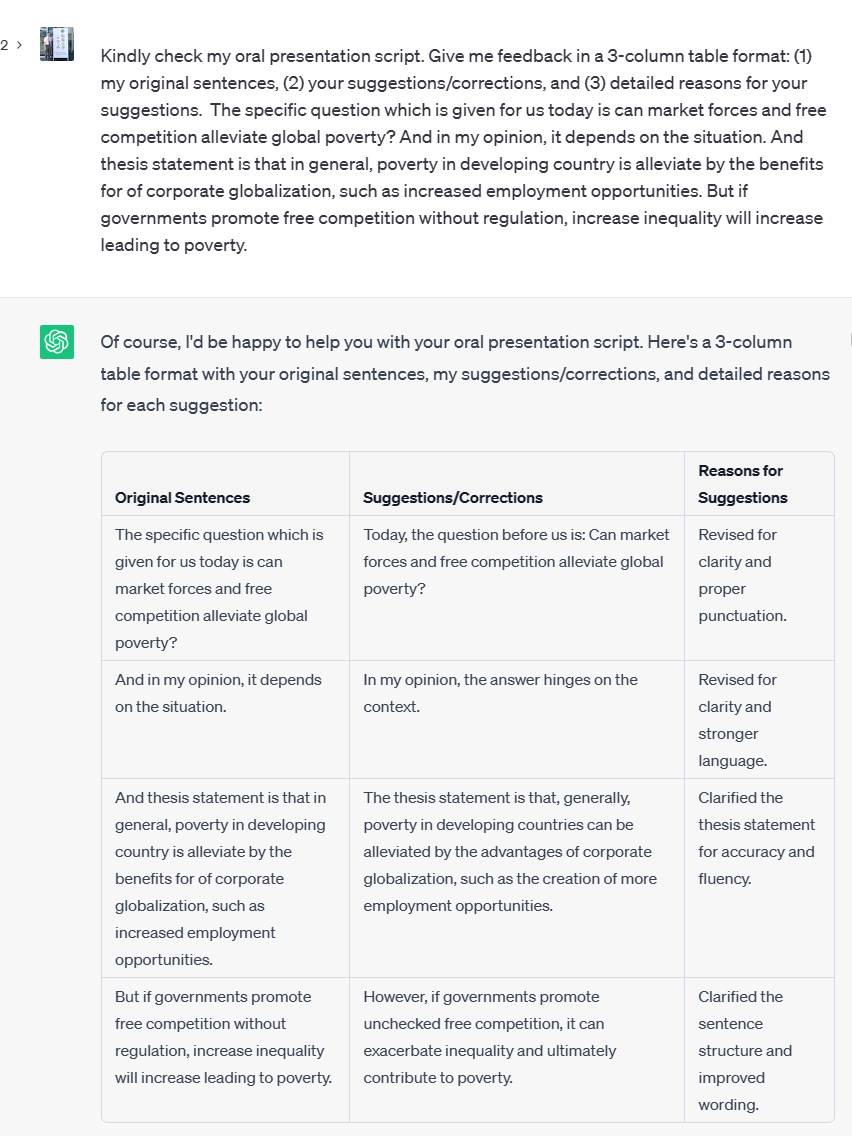

As shown in Appendix 3, GPT-3.5 can also simulate peer review, offering detailed, personalized feedback on a draft. Together, these examples illustrate the versatility of generative AI in academic contexts and hint at further potential uses.

Conclusion

Generative AI holds significant potential for enhancing L2 students’ writing skills, but integrating it into the tasks teachers currently use to help students learn and practice these skills requires careful consideration. By setting clear guidelines and demonstrating acceptable uses, educators can promote ethical AI use and mitigate risks such as plagiarism. The goal is for AI to augment and develop human expertise, not replace it. As generative AI’s presence in academia grows, educators must steer its ethical and effective application. Ignoring AI is not a realistic option, and neither is trying to ban it. We therefore argue that it is better to embrace this new technology and show students how it can complement their language studies and broaden their horizons. This article underscores the importance of golden rules and practical examples that help students harness AI to enrich their language skills, develop original content, and improve their accuracy and fluency. The ability to use AI tools ethically and effectively will be a key competency for students in the future workforce.

References

Caulfield, J. (2023a, September 6). Best AI detector: Free & premium tools compared. Scribbr. https://www.scribbr.com/ai-tools/best-ai-detector/

Caulfield, J. (2023b, November 21). University policies on AI writing tools: Overview & list. Scribbr. https://www.scribbr.com/ai-tools/chatgpt-university-policies/

Dotan, T. (2023, March 16). Microsoft adds the tech behind ChatGPT to its business software. The Wall Street Journal. https://www.wsj.com/articles/microsoft-blends-the-tech-behind-chatgpt-in...

Farrokhnia, M., Banihashem, S. K., Noroozi, O., & Wals, A. (2023). A SWOT analysis of ChatGPT: Implications for educational practice and research. Innovations in Education and Teaching International, 1–15. https://doi.org/10.1080/14703297.2023.2195846

Hockly, N. (2023). Artificial intelligence in English language teaching: The good, the bad and the ugly. RELC Journal, 54(2), 445–451. https://doi.org/10.1177/00336882231168504

Kohnke, L., Moorhouse, B. L., & Zou, D. (2023). ChatGPT for language teaching and learning. RELC Journal, 54(2), 537–550. https://doi.org/10.1177/00336882231162868

Liang, W., Yuksekgonul, M., Mao, Y., Wu, E., & Zou, J. (2023). GPT detectors are biased against non-native English writers. Patterns, 4(7), 1–4. https://doi.org/10.1016/j.patter.2023.100779

Mizumoto, A., & Eguchi, M. (2023). Exploring the potential of using an AI language model for automated essay scoring. Research Methods in Applied Linguistics, 2(2), 1–13. https://doi.org/10.1016/j.rmal.2023.100050

Raine, P. (2023). ChatGPT: Initial implications for language teaching and learning. The Language Teacher, 47(2), 38–41. https://doi.org/10.37546/JALTTLT47.2

Van Gompel, K. (2023, March 2). Five ways to prepare writing assignments in the age of AI. Turnitin. https://www.turnitin.com/blog/five-ways-to-prepare-writing-assignments-i...

Appendix 1

Use of AI to Generate Bilingual Vocabulary and Key Expression Lists on Any Topic

Appendix 2

Use of AI to Draft a Structured Essay Outline

Note. This is a truncated version of the results for brevity.

Appendix 3

Use of AI for Simulated Peer Review, Obtaining Structured Feedback on Drafts